Picode: Inline Photos Representing Posture Data in Source Code

'11-13 IDE Robot ACM CHI '13

Abstract

Current programming environments use textual or symbolic representations. While these representations are good at describing logical processes, they are poorly suited to representing human and robot posture data, which is necessary for handling gesture input and controlling robots.

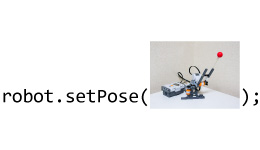

To address this issue, we propose a text-based development environment integrated with visual representations: photos of humans and robots. With our development environment, the user first takes a photo to bind it to posture data. Then, s/he drags and drops the photo into the code editor, where it is shown as an inline image.

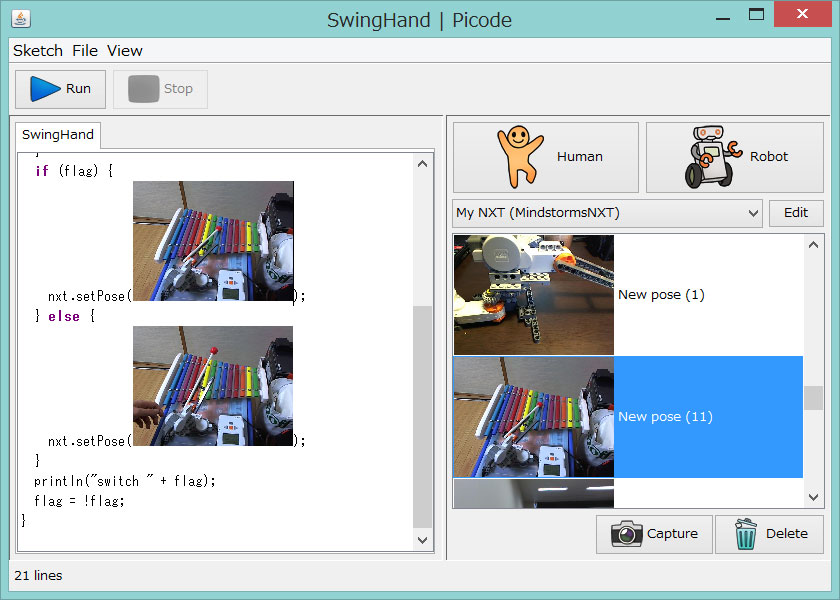

Picode IDE

Our prototype implementation consists of three main components: the code editor, the pose library, and the preview window. First, the user takes a photo of a human or robot in the preview window. At the same time, the posture data is captured, and the resulting dataset (the photo together with its posture data) is stored in the pose library. Next, s/he drags and drops the photo from the pose library into the code editor, where the photo is shown inline. Then, s/he can run the application. S/he can also distribute the source code bundled with the referenced datasets so that others can run the same application in our development environment.

During the coding phase, the programmer can benefit from a software library tightly coupled with the IDE. The library provides a set of APIs that enable easy control of robots. The main functions are shown below. Other APIs are listed in the Javadoc.

- Pose Robot.getPose()
  - Returns the current pose data.
- float Pose.distance(Pose pose)
  - Calculates the distance between the two poses. The result is in the range [0.0, 1.0].
- boolean Pose.eq(Pose pose, float threshold)
  - Returns whether the two poses can be considered identical, that is, whether the distance between them is less than the threshold.
- boolean Robot.setPose(Pose pose)
  - Sets the current pose to the specified data.
- Action Robot.action()
  - Starts building an action for the robot.
- Action Action.pose(Pose pose)
  - Adds the given pose to the end of this action.
- Action Action.stay(int ms)
  - Waits for the specified time (in milliseconds) at the end of this action.
- ActionResult Action.play()
  - Plays this action.
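To illustrate how these calls might fit together, here is a minimal, self-contained sketch. It does not use the actual Picode library: the classes `Pose`, `Robot`, `Action`, and `ActionResult` below are hypothetical stand-ins that only mirror the signatures listed above. The joint-angle array, the mean-absolute-difference metric, the fields of `ActionResult`, and the wrapper class `PicodeSketch` are all assumptions made for illustration. In Picode itself, the pose arguments would appear as inline photos in the editor rather than as named variables.

```java
import java.util.ArrayList;
import java.util.List;

public class PicodeSketch {

    /** Stand-in pose: joint values normalized to [0, 1] (an assumption). */
    static final class Pose {
        private final float[] joints;

        Pose(float... joints) {
            this.joints = joints.clone();
        }

        /** Assumed metric: mean absolute joint difference, which stays in [0.0, 1.0]. */
        float distance(Pose other) {
            if (joints.length != other.joints.length) {
                throw new IllegalArgumentException("poses must have the same number of joints");
            }
            if (joints.length == 0) {
                return 0f;
            }
            float sum = 0f;
            for (int i = 0; i < joints.length; i++) {
                sum += Math.abs(joints[i] - other.joints[i]);
            }
            return sum / joints.length;
        }

        /** True when the distance to the other pose is below the threshold. */
        boolean eq(Pose other, float threshold) {
            return distance(other) < threshold;
        }
    }

    /** Stand-in result type; its fields are hypothetical. */
    static final class ActionResult {
        final int posesApplied;
        final int totalWaitMs;

        ActionResult(int posesApplied, int totalWaitMs) {
            this.posesApplied = posesApplied;
            this.totalWaitMs = totalWaitMs;
        }
    }

    /** Stand-in robot that only remembers its current pose. */
    static final class Robot {
        private Pose current;

        Robot(Pose initial) {
            this.current = initial;
        }

        Pose getPose() {
            return current;
        }

        boolean setPose(Pose pose) {
            if (pose == null) {
                return false;
            }
            current = pose;
            return true;
        }

        Action action() {
            return new Action(this);
        }
    }

    /** Builder that queues poses and waits, then replays them in order. */
    static final class Action {
        private static final class Step {
            final Pose pose;   // null marks a wait step
            final int waitMs;

            Step(Pose pose, int waitMs) {
                this.pose = pose;
                this.waitMs = waitMs;
            }
        }

        private final Robot robot;
        private final List<Step> steps = new ArrayList<>();

        Action(Robot robot) {
            this.robot = robot;
        }

        Action pose(Pose pose) {
            steps.add(new Step(pose, 0));
            return this;
        }

        Action stay(int ms) {
            steps.add(new Step(null, ms));
            return this;
        }

        /** Simulated playback: applies poses and sums waits without sleeping. */
        ActionResult play() {
            int posesApplied = 0;
            int totalWaitMs = 0;
            for (Step step : steps) {
                if (step.pose != null) {
                    if (robot.setPose(step.pose)) {
                        posesApplied++;
                    }
                } else {
                    totalWaitMs += step.waitMs;
                }
            }
            return new ActionResult(posesApplied, totalWaitMs);
        }
    }

    public static void main(String[] args) {
        Pose armsDown = new Pose(0.0f, 0.0f);
        Pose armsUp = new Pose(1.0f, 1.0f);
        Robot robot = new Robot(armsDown);

        // Only wave if the robot currently looks like the "arms down" pose.
        if (robot.getPose().eq(armsDown, 0.1f)) {
            ActionResult result = robot.action()
                    .pose(armsUp)
                    .stay(500)
                    .pose(armsDown)
                    .play();
            System.out.println("poses applied: " + result.posesApplied
                    + ", simulated wait: " + result.totalWaitMs + " ms");
        }
    }
}
```

The chained calls work because `pose` and `stay` return the same `Action`, matching the return types listed above. Nothing is sent to the robot until `play()` is called. The real library presumably drives hardware and waits in real time, whereas this sketch only records what would happen.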

Publication

- Jun Kato, Daisuke Sakamoto, Takeo Igarashi, "Picode: Inline Photos Representing Posture Data in Source Code", In CHI '13: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 3097-3100, Apr. 2013. ACM CHI 2013 Best Paper Honorable Mention Award.